Published by Dimitri Vedeneev, Director, Cloud & Cyber Solutions, and Henry Ma, Technical Director, Strategy & Consulting

Interest in artificial intelligence (AI) has moved beyond hype, with investment growing as organisations of all sizes seek to harness new capabilities and efficiencies.

The momentum across all industries is undeniable.

A recent Harvard Business Review Analytic Services survey found that 83% of professionals believe companies in their sector will lose ground if they fail to adopt generative AI (GenAI). That sense of urgency is driving rapid decision-making. But moving too quickly can expose organisations to unintended and costly consequences, from data exposure to misalignment with evolving regulatory expectations.

In the rush to innovate, it is easy to lose sight of the risks.

This underscores a fundamental lesson in AI adoption: the importance of taking a deliberate approach that considers your organisation’s context, use cases, and the specific security challenges these technologies introduce.

What’s Changed with AI?

Artificial intelligence is not new. Many organisations have been using machine learning and predictive models for years. What has changed recently is the rise of large language models (LLMs) powering GenAI and agentic AI platforms – many of which are now easily available to consumers.

Remember early 2023, when everybody started talking about ChatGPT? OpenAI's chatbot reached an estimated 100 million monthly active users in January that year, making it the fastest-growing consumer application in history at the time.

These platforms generate new content based on vast datasets and troves of information publicly available across the internet. Those datasets can also include proprietary and sensitive information. Some platforms, such as agentic AI, can go further and make decisions and take actions based on that content.

A 2024 report from the Australian Securities and Investments Commission (ASIC) noted a shift toward more complex and opaque AI models, including GenAI, and forecast exponential growth in their use. Compared with earlier models, generative systems introduce new and challenging considerations, such as the difficulty of interpreting and validating outputs and of ensuring sufficient security controls, particularly when models are built on third-party software with limited transparency.

Understanding these shifts is essential to managing new exposures effectively.

The Risk Landscape

As AI adoption accelerates, organisations face an expanded attack surface and a growing set of security and operational risks arising from the nature of AI systems.

An emerging range of technical threats and vulnerabilities includes prompt injection, jailbreaking, model poisoning, and supply chain or cloud infrastructure compromises. If realised, these could lead to exposure of sensitive data, unexpected system behaviour that causes harm to people, and disruption of business operations.

Alongside technical concerns, AI adoption introduces broader organisational challenges, including:

- Transparency and explainability of model outputs.

- Accountability and human oversight gaps.

- Procedural fairness and ethical considerations.

- Insider threats from accidental data leakage or model misuse.

- Reputational and trust damage.

- Keeping pace with fast-evolving legal and regulatory obligations.

Addressing these emerging risks requires a holistic approach that combines secure-by-design principles, clear governance, and deliberate human oversight.

Understanding Your Organisation’s Approach

AI security risk varies depending on how your organisation invests in AI capabilities. While some organisations are actively building AI models and embedding security into their development cycles, the majority operate in a hybrid space, deploying commercial AI solutions that integrate organisational data and processes.

This hybrid model introduces significant risks and complexities, particularly around third-party dependencies, access controls, and data handling obligations across the supply chain.

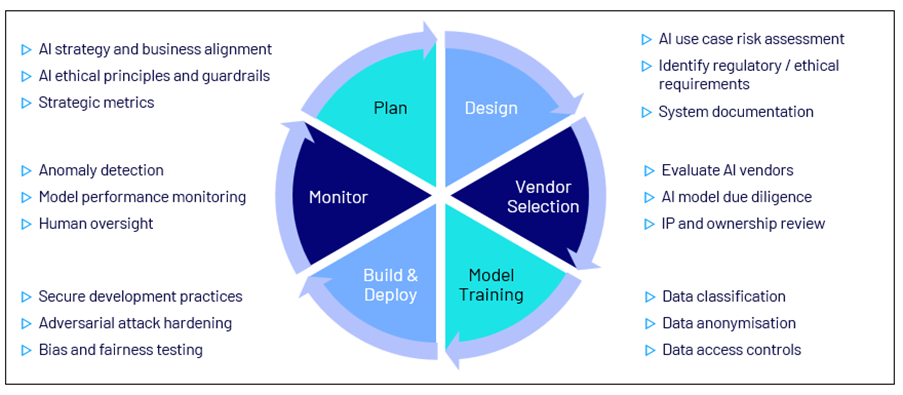

Your organisation’s AI and technology strategies shape its AI risk profile and determine the safeguards needed to manage that risk effectively. Whether your organisation is embedding an AI feature or developing custom models, each stage of the AI Development Lifecycle introduces distinct security considerations. Some of these key considerations are illustrated in the diagram below.

AI Development Lifecycle

For governance to remain effective across these lifecycle activities, your organisation needs a clear understanding of who is responsible for each activity. Without that clarity, security gaps can emerge.

Securing the AI Journey

As with all digital transformation, strong security fundamentals still apply to AI adoption and use. The challenge is how to adapt these to the unique risks that AI introduces.

A helpful starting point is to break this down into four layers:

- Data: Is sensitive data adequately protected before it’s exposed to AI systems? Is it labelled, classified, and handled appropriately?

- Model: Are there controls to prevent manipulation, misuse, or leakage? Can models be tested safely before deployment? Are third-party vendors properly evaluated?

- User: Are access and usage policies clearly defined, monitored, and enforced? Do users understand their responsibilities when engaging with AI systems?

- Governance: Are organisational roles, policies, and risk appetite clearly defined for AI use? Have current security controls been adapted to AI-specific challenges?

This layered approach should align with your organisation’s AI strategy, as developing, deploying, and hybrid approaches each carry different risks and responsibilities.

Wherever your organisation is on the AI journey, its approach to security must be adaptable and proportionate to how AI is being used. The level and type of controls required will depend on your organisation’s specific use cases and the risks they pose to systems, data, and people.

Securing AI is not just about reducing risk. It positions your organisation to keep pace with technological transformation and to benefit from AI that is trustworthy, sustainable, and fit-for-purpose.

This article introduces key ideas that will be explored further in our upcoming series of in-depth insights on securing AI.