Published by Dimitri Vedeneev, Executive Director, Secure AI Lead, and Henry Ma, Technical Director, Strategy & Consulting on 13 February 2026

Our last blog charted the evolution of Generative AI (GenAI) into an increasingly common workplace tool and outlined the role of risk management strategies as organisations accelerate their AI adoption. But what are these risk mitigation strategies?

In this blog we explore what your organisation can do now, emphasising how an organisation’s existing cyber security controls – from data management to third-party risk management – can form the basis of managing AI risks appropriately.

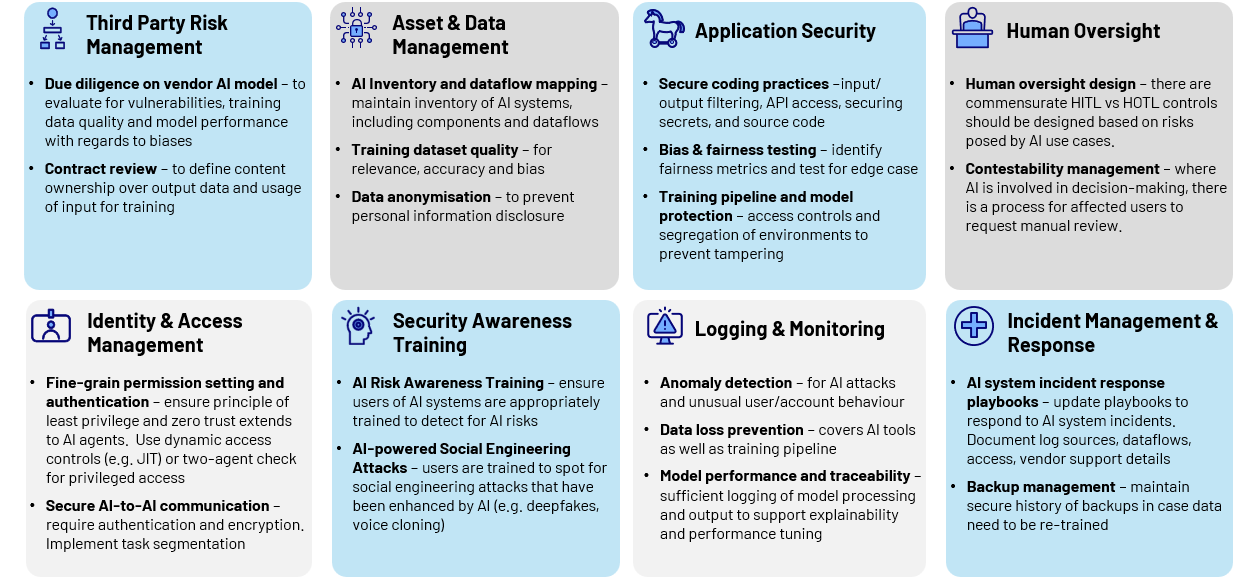

As the field of GenAI is evolving rapidly, CyberCX has identified eight areas to focus on now to accelerate the secure adoption of AI. Other strategies may mature as AI software and GenAI capabilities evolve.

Key AI Risk Mitigation Strategies

1. Data Management

As the efficacy of every AI system relies on access to data, the age-old adage of ‘you cannot protect what you don’t know’ is as relevant as ever. The added complexity is knowing not just what data your AI system had access to, but also what transformations that data went through to arrive at the AI system’s output.

If your organisation is taking the extra step of fine-tuning models for a specific business use case, then maintaining the accuracy and completeness of training data is crucial to ensuring the AI system’s output remains relevant, accurate and unbiased. Applying sufficient data anonymisation to prevent the accidental disclosure of personal information is part of this control. However, data masking can be a difficult control to scale without the right data governance and classification foundations in place, as most modern large language models need clear text to operate effectively.
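To make the masking idea concrete, here is a minimal Python sketch that swaps recognisable personal identifiers for typed placeholders before text is used for fine-tuning or sent to a model. The function name `mask_pii` and both regular expressions are hypothetical and illustrative only: they assume simple email formats and Australian-style mobile numbers. A production control would draw on the organisation’s data classification scheme and a vetted detection tool rather than hand-written patterns.

```python
import re

# Hypothetical patterns for illustration only; a real control would rely on the
# organisation's data classification scheme and a vetted PII detection tool.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Assumed format: Australian-style mobile numbers, e.g. 0412 345 678.
    "PHONE": re.compile(r"\b04\d{2}\s?\d{3}\s?\d{3}\b"),
}


def mask_pii(text: str) -> str:
    """Replace each matched PII value with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    record = "Contact Jane on 0412 345 678 or jane.doe@example.com about the claim."
    print(mask_pii(record))
    # prints: Contact Jane on [PHONE] or [EMAIL] about the claim.
```

Note that the name ‘Jane’ passes through untouched: pattern-based masking only catches what it has been told to look for, which is why it is hard to scale without classification foundations.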

2. Application Security

One of the most prevalent AI adversarial attacks is prompt injection. A threat actor crafts a malicious prompt to ‘trick’ the AI system into performing an action on the actor’s behalf, such as disclosing sensitive information. This is not dissimilar to the SQL injection attacks that have troubled web applications for the past two decades.

While predictable input validation checks have worked in the past for deterministic systems, they need to evolve into the monitoring, analysis and guardrails that reduce the risk of malicious prompts being executed. It may also be prudent to supplement this with an output sanitisation mechanism to ensure the output generated by AI is safe. Because application security controls for AI are evolving rapidly, staying on top of the OWASP Top 10 for Large Language Model Applications is important in right-sizing this strategy.
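The two layers described above, screening prompts on the way in and sanitising output on the way out, can be sketched in a few lines of Python. Everything here is an assumption for illustration: the function names, the deny-list phrases and the credential format are hypothetical. Keyword matching is easily evaded by rephrasing, so treat it as one layer among several rather than a complete defence against prompt injection.

```python
import re

# Hypothetical deny-list of phrasings seen in injection attempts.
# Pattern matching alone is easy to evade; this is one guardrail among several.
SUSPICIOUS_INPUT = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

# Hypothetical credential format to strip from model output before display.
SECRET_OUTPUT = re.compile(r"\b(?:sk|api)_[A-Za-z0-9]{16,}\b")


def screen_prompt(prompt: str) -> bool:
    """Return True if the prompt may proceed, False if it should be held for review."""
    return not any(pattern.search(prompt) for pattern in SUSPICIOUS_INPUT)


def sanitise_output(output: str) -> str:
    """Redact strings that look like credentials from model output."""
    return SECRET_OUTPUT.sub("[REDACTED]", output)
```

In practice, a blocked prompt might be logged and routed for review rather than silently dropped, so the monitoring side of the control has something to analyse.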

3. Identity and Access Management (IAM)

Given the volume of data and the number of systems an AI system can access, IAM will play a crucial role across the whole AI development lifecycle, from limiting access to training data and models to ensuring all communications and interfaces are properly secured and authenticated.

More importantly, as organisations start to explore Agentic AI use cases, a new challenge is emerging: managing the proliferation of machine identities. This risk can be realised through agentic system misalignment (e.g. agents deciding to delete whole codebases) or through malicious human threat actors exploiting long-lived credentials (e.g. stealing API keys or tokens from identity management platforms). This control will require fine-grained permissions, just-in-time provisioning and de-provisioning of agentic and AI system tokens, and high levels of monitoring and traceability to identify authorisation gaps.
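The just-in-time pattern can be illustrated with a small in-memory token broker in Python: each agent receives a random token scoped to specific permissions that expires after a short TTL, every authorisation decision is recorded for traceability, and the token can be revoked as soon as the task ends. The `TokenBroker` class, its scope names and the five-minute default TTL are hypothetical; a real deployment would delegate this to the organisation’s identity platform rather than hand-rolled code.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentToken:
    agent_id: str
    scopes: frozenset[str]
    expires_at: float
    # Random, unguessable token value generated at issue time.
    value: str = field(default_factory=lambda: secrets.token_urlsafe(32))


class TokenBroker:
    """Hypothetical broker issuing short-lived, narrowly scoped tokens to AI agents."""

    def __init__(self, ttl_seconds: float = 300, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._active: dict[str, AgentToken] = {}
        self.audit_log: list[tuple[str, str, str, bool]] = []

    def issue(self, agent_id: str, scopes: set[str]) -> AgentToken:
        # Just-in-time provisioning: grant only the scopes this task needs.
        token = AgentToken(agent_id, frozenset(scopes), self.clock() + self.ttl)
        self._active[token.value] = token
        return token

    def authorise(self, token_value: str, scope: str) -> bool:
        token = self._active.get(token_value)
        # Unknown, expired or out-of-scope tokens are all rejected.
        allowed = (
            token is not None
            and self.clock() < token.expires_at
            and scope in token.scopes
        )
        # Record every decision (with only a token prefix) for traceability.
        agent = token.agent_id if token is not None else "unknown"
        self.audit_log.append((agent, scope, token_value[:8], allowed))
        return allowed

    def revoke(self, token_value: str) -> None:
        # Just-in-time de-provisioning once the task completes.
        self._active.pop(token_value, None)
```

Logging only a token prefix in the audit trail is a deliberate choice: the trail supports tracing without itself becoming a store of live credentials.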

4. Third-Party Risk Management (TPRM)

As organisations go down the path of ‘deployer’ rather than ‘developer’ of AI systems and models, managing third-party risk appropriately becomes essential. For AI, the two new areas that need to be managed are:

- Increased due diligence on the model maker, platform or source.

- Intellectual property and privacy considerations around data shared with the AI system or model.

With AI systems and models delivered to organisations as essentially a ‘black box’, key questions become critical: where inference takes place, where data is stored and what data was used to train the model. The answers determine whether organisations remain compliant with data sovereignty and privacy obligations, and whether the model is susceptible to bias, discrimination or unfairness risks.

Further third-party considerations arise around ownership of generated content (user, vendor or owner of the training data) and liability when an AI model has been trained on copyright-infringing data.

5. Human Oversight

With AI increasingly integrated into business processes and given more autonomy to act, appropriate human oversight is a fine balancing act: organisations want to keep the efficiency gains while managing the risk of harmful actions or incorrect decisions.

Unlike the other control areas, which are largely about implementing technical controls, this area is about redesigning business processes: deciding where human oversight should sit and what kind of oversight is needed.

Some control design types include:

- Human-in-the-loop (HITL) – a human must explicitly approve a decision before the AI system proceeds to execute a process. This suits cases where allowing the AI agent to act independently would expose the enterprise to high-risk consequences.

- Human-on-the-loop (HOTL) – a human monitors the AI system but does not approve each action. This suits cases where the risk is manageable and any incident that occurs would remain within acceptable tolerance.

Getting the user experience right, both within and outside the loop, is important to creating an operationally effective control.
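One way to express the HITL/HOTL split in an agent workflow is a gate that looks up the oversight mode for each proposed action. In this Python sketch, the two-level risk rating, the `approve` and `notify` callbacks and the rule that only high-risk actions need approval are all assumptions for illustration; where that line sits is exactly the business process decision described above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Oversight(Enum):
    HITL = "human-in-the-loop"  # a human must approve before execution
    HOTL = "human-on-the-loop"  # the action proceeds; a human monitors


@dataclass
class ProposedAction:
    description: str
    risk: str  # hypothetical risk rating: "high" or "low"


def oversight_for(action: ProposedAction) -> Oversight:
    # Assumed policy: only high-risk actions need explicit approval.
    return Oversight.HITL if action.risk == "high" else Oversight.HOTL


def run_with_oversight(
    action: ProposedAction,
    execute: Callable[[ProposedAction], str],
    approve: Callable[[ProposedAction], bool],
    notify: Callable[[str], None],
) -> str:
    mode = oversight_for(action)
    if mode is Oversight.HITL and not approve(action):
        # Nothing is executed without the human's decision.
        return "blocked: approval declined"
    result = execute(action)
    if mode is Oversight.HOTL:
        # Monitoring only: the human is told after the fact and can intervene.
        notify(f"executed without approval: {action.description}")
    return result
```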

6. Security Awareness

Given that AI is creating new threat vectors for cyber criminals, user awareness of these vectors is essential to minimising risky human actions. Threat actors are increasingly using AI to scale and accelerate attacks through deepfakes and other AI-enabled social engineering. Organisations and security professionals should consider how to include AI security awareness in their broader security training and awareness campaigns.

AI awareness training should be tailored to board and executive audiences, traditional business users, and administrators or developers. Each cohort has different needs and exposes the enterprise to different attack surface risks, so each must understand its responsibilities and the processes for using AI securely in its work.

7. Logging and Monitoring

While appropriate logging and monitoring will play a crucial part in identifying anomalous activities and behaviours caused by external threats such as adversarial attacks, the inherently probabilistic nature of AI systems means organisations must also log enough to support the explainability and transparency of AI decisions (kind of like showing your workings to your maths teacher).

There are two key practical implications of this:

- Additional log storage and detection logic requirements.

- Privacy implications of logging employee inputs.

Some organisations are taking a more conservative approach and logging all AI interactions, inference processes and outputs. This control creates a child risk: the logs themselves hold highly sensitive data, and traditional logging and monitoring solutions may not be fit for purpose for storing and protecting it.
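A minimal Python sketch of a privacy-conscious interaction log record follows. It pseudonymises the employee identifier with a keyed hash (HMAC-SHA256) and redacts email addresses before the prompt and output are stored. The record schema, the placeholder key and the choice to redact only emails are hypothetical; which fields are masked should follow the organisation’s data classification and privacy obligations.

```python
import hashlib
import hmac
import json
import re
from datetime import datetime, timezone

# Assumption: in practice the key lives in a secrets manager, separate from the
# log store, so log readers cannot trivially re-identify employees.
PSEUDONYM_KEY = b"replace-with-key-from-secrets-manager"
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymise(user_id: str) -> str:
    """Keyed hash of the user ID: stable for correlation, not reversible without the key."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode(), hashlib.sha256).hexdigest()[:16]


def ai_interaction_record(user_id: str, model: str, prompt: str, output: str) -> str:
    """Build one JSON log line for an AI interaction (hypothetical schema)."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": pseudonymise(user_id),
        "model": model,
        "prompt": EMAIL.sub("[EMAIL]", prompt),
        "output": EMAIL.sub("[EMAIL]", output),
    }
    return json.dumps(record)
```

Using a keyed hash rather than a plain hash matters: without the key, someone with access to the logs cannot simply hash a list of known employee IDs to re-identify users, while the team holding the key still can when an investigation requires it.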

Traditional data loss prevention controls may help, but they require inputs from a variety of technical sources (e.g. endpoint, network and identity) to be integrated to provide the right balance of preventative control.

8. Incident Response

While cyber security incidents involving AI systems are still emerging, organisations that are further along their AI adoption journey are increasingly preparing proactively. This includes developing incident response playbooks and simulating responses to adversarial attacks against systems with AI components, as well as to supply chain compromise of upstream AI systems.

One of the most common and easiest attacks to execute is prompt injection leading to data loss. From a response perspective, this is not dissimilar to a data breach incident. The difficulty lies in identifying the extent of data loss, which depends on the availability and comprehensiveness of logs.
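To illustrate why scoping depends on log coverage, here is a Python sketch that takes interaction logs, finds sessions whose prompts contain a known injection indicator, and lists every document those sessions retrieved. The `LogEntry` structure and its `retrieved_docs` field are hypothetical; the approach only works if logs actually capture what the AI system surfaced in each interaction.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    session: str
    prompt: str
    retrieved_docs: list[str]  # hypothetical: documents the AI system surfaced


def scope_data_loss(entries: list[LogEntry], indicator: str) -> tuple[list[str], list[str]]:
    """Return (sessions containing the indicator, documents retrieved in those sessions)."""
    needle = indicator.lower()
    compromised = {e.session for e in entries if needle in e.prompt.lower()}
    # Every document surfaced anywhere in a compromised session is treated as
    # exposed, including turns that followed the injected prompt.
    exposed = {
        doc for e in entries if e.session in compromised for doc in e.retrieved_docs
    }
    return sorted(compromised), sorted(exposed)
```

Substring matching on a single indicator will miss rephrased payloads, so in a real investigation it would be a starting point rather than the final answer.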

Another key focus is supply chain compromise. AI systems, especially those connected to external codebases or packages, are prone to compromise via simple prompt injection, for example malicious instructions contained in tainted software packages or in code commit messages.

Key controls include strong DevSecOps and code control policies, backup and recovery processes, and isolation between development and production environments.

Moving Forward

As organisations race forward in their AI adoption, the operational risk of using AI will grow. But mitigating these risks doesn’t need to be a monumental task.

Instead, view this through the lens of uplifting existing controls rather than building a new empire. This approach enables your organisation to innovate at speed while keeping your data and your customers safe.

In our next blog, we will look at this evolving AI landscape and how organisations can navigate the contrasting approaches to AI regulation in regions like the United States and Europe.

Contributing authors: Brianna Streat and Umang Barot